Learning to program

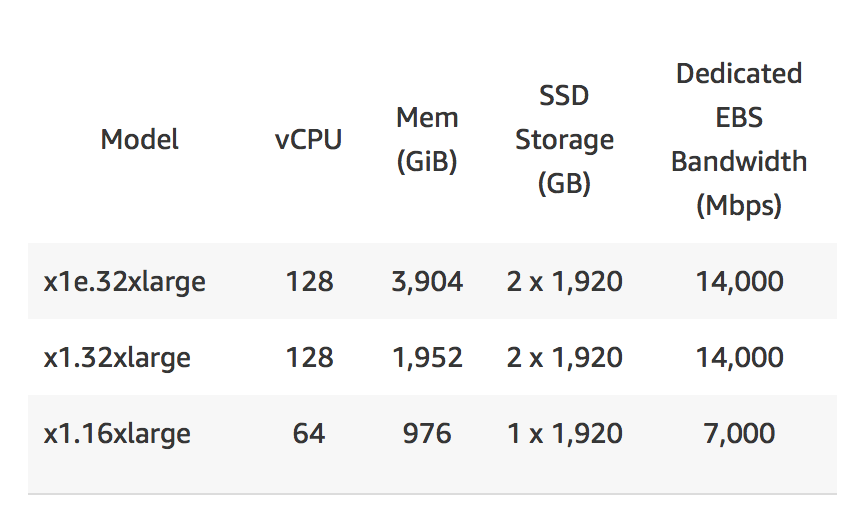

I had an interesting conversation at work today -- about how to learn to program.

Back when dinosaurs roamed the earth, you sat down at your computer and quickly learned something like BASIC. Once you outgrew that, you were SOL unless you delved into assembly; those were the options. Before the dinosaurs, when only algae were around, you poked at punched cards and maybe had a compiler. But the resource constraints were such that you had to understand the underlying concepts to get anything reasonable done.

Now you can get a PhD knowing nothing but Python or Java. Resources aren't constrained in the same way. Hell, you can spin up an EC2 instance on AWS with nearly 4TB of memory -- memory as in actual RAM. The idea that you need to conserve a damn thing has been thrown out the window. Just get a bigger machine. Have a problem? Throw on another design pattern.

I disagree with that.

I think you should start with a simple language like Python, Ruby, or Java... but once you have the hang of that, you really need to get dirty with C, or even better, assembly. You need to understand both the hows and the whys of how the software functions. Things like pointers have been effectively abstracted away from what it takes to design a system, but understanding how memory works for real is fundamental to how a system functions.

You can, of course, go deeper. You can take a look at how the processor processes, for instance. Or how the TLB handles memory mapping. Hell, what is an n-way associative cache and how can you best use it? It's all good to know, but I don't think it's as critical to the fundamentals.

It is critical that the modules you are using are not black boxes. Black boxes lead to magical thinking.

Any sufficiently advanced technology is indistinguishable from magic.

Magic is bad. The truth will set you free.
